CodexMCP: Simulating 1,100 Realistic ISP Users with Full MAC-Authenticated DHCP

I made all of these changes and additions solo between 5:00 AM and 6:30 AM, before heading into another soul-crushing day of telecom ticket triage. While most people were still asleep or prepping for standup meetings that accomplish nothing, I stood up an entire virtual ISP core—provisioned, authenticated, and observable in real time.

This builds directly on my earlier Docker DHCP stress test, which proved I could scale containers and hand out leases under pressure. That was raw load testing. This was full orchestration, built on relational data, governed by service status, and backed by operational visibility from wire to dashboard.

The Objective

My goal was to emulate broadband provisioning for 1,100 simulated subscriber devices, using nothing but open systems, declarative policy, and code I wrote myself:

- Subscriber identity stored in MariaDB

- MAC-authenticated DHCP provisioning via Kea

- Containers acting like user devices with randomized lease churn

- Real-time observability via Grafana and OpenSearch

- Logic enforcement: no record in the DB = no lease = no network

Everything ran under the CodexMCP simulation stack, my operational cortex for full-stack ISP workflows.

System Components

CodexMCP handles everything: system bootstraps, API delivery, data loading, and simulation orchestration. There are no janky config files, no vendor lock-in, just raw control over infrastructure behavior.

Here’s the full stack I spun up:

- MariaDB — Stores subscriber, service, MAC, and device info

- CodexMCP (Go) — Governs data flow and enforces logic

- Docker — Spins up containers to simulate user endpoints

- Kea DHCP — Enforces MAC-based DHCP leases

- Logstash + OpenSearch + Grafana — Real-time lease tracking and churn graphs

Syslogs and metrics are dual-written for both archival and real-time analysis.

The Database Structure

I moved from synthetic testing into real operational modeling by defining proper relational tables:

- subscribers: MACs, status (active, suspended), username, and ID

- subscriber_contacts: phone, email, address

- devices: hardware entry points (e.g., DSLAM, fiber splitter)

- services: Internet, VoIP, IPTV, DNS, etc.

- subscriber_services: entitlement mapping per user

The DHCP server authenticates against this structure. If your MAC isn’t in the database—or your services are suspended—you don’t get a lease, period.
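To make the rule concrete, here is a minimal sketch of the decision itself. It is not how Kea performs the check; it only restates the policy over rows shaped like `mysql -N` output. The function name `lease_allowed` is hypothetical, and the two-column input (MAC, then status) is an assumption.

```bash
#!/usr/bin/env bash
# Sketch only: restates the "no record or not active = no lease" rule.
# lease_allowed is a hypothetical helper, not part of CodexMCP or Kea.

# Read "mac<TAB>status" rows on stdin and decide whether the MAC given
# as $1 may receive a lease. Exit status 0 = allow, 1 = refuse.
lease_allowed() {
    awk -F'\t' -v want="$1" '
        # Compare MACs case-insensitively; stop at the first match.
        tolower($1) == tolower(want) { found = 1; ok = ($2 == "active"); exit }
        # Unknown MACs and non-active subscribers both end up refused.
        END { exit !(found && ok) }
    '
}
```

Fed from the live database, it could be called as `mysql ... -N -e "SELECT mac_address, status FROM subscribers" | lease_allowed "02:aa:bb:cc:dd:01"`, using the same column names the launcher script queries.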

Bulk User Generation

I wrote a stored procedure to generate 1,000 subscribers safely:

- Avoids ID collisions by checking the last known subscriber

- Generates usernames like sim_user_#### and randomized local MACs (02:xx:xx:...)

- Alternates hardware attachment points for diversity

- Assigns default service entitlements:

  - Basic Internet

  - VoIP line

  - DNS resolver

Each record ties into contact info, service maps, and device relationships.
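The real generator is a MariaDB stored procedure, which is not reproduced here. As a rough illustration of the same naming and MAC scheme, the Bash sketch below emits usernames and locally administered MACs. All three function names are hypothetical, and the four-digit zero padding is an assumption read off the sim_user_#### pattern.

```bash
#!/usr/bin/env bash
# Sketch only: mirrors the naming and MAC scheme described above.
# Function names are hypothetical; the real logic is a stored procedure.

# Print a random locally administered, unicast MAC (first octet fixed at 02).
random_local_mac() {
    printf '02:%02x:%02x:%02x:%02x:%02x\n' \
        $((RANDOM % 256)) $((RANDOM % 256)) $((RANDOM % 256)) \
        $((RANDOM % 256)) $((RANDOM % 256))
}

# Print a zero-padded username for a numeric subscriber ID.
sim_username() {
    printf 'sim_user_%04d\n' "$1"
}

# Emit COUNT "username<TAB>mac" rows, numbering from LAST_ID + 1 so that
# new rows never reuse an existing subscriber ID.
generate_subscribers() {
    local last_id=$1 count=$2 i
    for ((i = 1; i <= count; i++)); do
        printf 'sim_user_%04d\t' "$((last_id + i))"
        random_local_mac
    done
}
```

Calling `generate_subscribers 100 1000` would print 1,000 rows starting at sim_user_0101. Random MACs can in principle collide, so a unique constraint on the MAC column would be the safer backstop.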

The Launcher Script

Next, I rewrote the Docker bootstrap logic to query the live DB. Below is the full Bash script:

    #!/bin/bash

    # --- CONFIG ---
    DB_HOST="10.0.1.35"
    DB_PORT="3306"
    DB_USER="codexmcp"
    DB_PASS="securepassword"
    DB_NAME="codexmcp"
    NET_NAME="dhcpnet"
    IMAGE_NAME="dhcp-pinger:latest"

    # --- QUERY active subscribers with MACs ---
    # -N suppresses the header row; piped mysql output is tab-separated,
    # so split on tabs to keep names containing spaces intact.
    mysql -h "$DB_HOST" -P "$DB_PORT" -u "$DB_USER" -p"$DB_PASS" "$DB_NAME" -N -e \
      "SELECT customer_name, mac_address FROM subscribers WHERE status = 'active'" |
    while IFS=$'\t' read -r NAME MAC; do
      # Sanitize name for Docker (no spaces, lowercase)
      SAFE_NAME=$(printf '%s' "$NAME" | tr '[:upper:]' '[:lower:]' | tr ' ' '_' | tr -cd 'a-z0-9_-')

      # Optional: Skip if already running (-x: whole-line match, -F: literal string)
      if docker ps --format '{{.Names}}' | grep -qxF -- "$SAFE_NAME"; then
        echo "[=] $SAFE_NAME already running, skipping."
        continue
      fi

      echo "[+] Launching $SAFE_NAME with MAC $MAC"
      docker run -d \
        --name "$SAFE_NAME" \
        --net="$NET_NAME" \
        --mac-address "$MAC" \
        "$IMAGE_NAME"
    done

Each result kicked off a container:

- Name matched the username

- MAC passed in with --mac-address

- Joined the virtual DHCP LAN

- Internal loop scripts inside the containers randomly released/renewed DHCP to create lease churn

No user in the DB? No container. No lease. No network presence.
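The in-container loop scripts are not shown in this post. A minimal sketch of what such a churn loop could look like follows, assuming the image ships ISC dhclient and the interface is eth0. The function names, the IFACE variable, and the sleep bounds are all illustrative, not taken from the dhcp-pinger image.

```bash
#!/usr/bin/env bash
# Sketch only: one possible churn loop, assuming ISC dhclient is installed.
# Interface name and sleep bounds are illustrative defaults.

IFACE="${IFACE:-eth0}"
MIN_SLEEP="${MIN_SLEEP:-30}"    # shortest pause between cycles, in seconds
MAX_SLEEP="${MAX_SLEEP:-300}"   # longest pause between cycles, in seconds

# Print a random whole number of seconds in [MIN_SLEEP, MAX_SLEEP].
random_pause() {
    echo $(( MIN_SLEEP + RANDOM % (MAX_SLEEP - MIN_SLEEP + 1) ))
}

# Release the current lease, then request a new one.
release_and_renew() {
    dhclient -r "$IFACE"   # -r: release the lease and stop the running client
    dhclient "$IFACE"      # start a fresh exchange with the DHCP server
}

# Run forever: wait a random interval, then churn the lease.
churn_loop() {
    while true; do
        sleep "$(random_pause)"
        release_and_renew || echo "[!] DHCP cycle failed on $IFACE" >&2
    done
}
```

Calling `churn_loop` as the container entrypoint's last step would keep each simulated device releasing and renewing at uneven intervals. Note that dhclient needs the NET_ADMIN capability to apply an address, so a container running this sketch would need `--cap-add NET_ADMIN` on `docker run`.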

Lease Events and Visuals

As soon as I launched the containers, Kea started logging:

- Lease allocation rates spiked within seconds

- Short lease times and scripted churn generated constant activity

- Grafana tracked the top-requesting MACs, lease status deltas, and I/O load on the DHCP VM

- Lease churn patterns emerged immediately, with clear behavioral telemetry across a virtual population

All of this came into focus in real time. No polling delay. No post-processing.
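For an offline cross-check of the "top-requesting MACs" panel, the sketch below counts rows per hardware address in a Kea memfile lease CSV. This assumes Kea is using the memfile lease backend, where the second column is hwaddr and each lease update appends a row, so the count is only a rough proxy for activity since the last lease file cleanup. The function name `top_macs` is hypothetical, and the file path depends on how Kea was installed.

```bash
#!/usr/bin/env bash
# Sketch only: assumes Kea's memfile lease backend (CSV with a header row,
# hwaddr in column 2). top_macs is a hypothetical helper.

# Print the N hardware addresses with the most rows in the lease file,
# as "count mac" lines sorted by count, highest first.
top_macs() {
    local file=$1 n=${2:-10}
    awk -F',' 'NR > 1 && $2 != "" { count[$2]++ }
               END { for (mac in count) print count[mac], mac }' "$file" |
        sort -k1,1nr -k2,2 |
        head -n "$n"
}
```

Usage would look like `top_macs /path/to/kea-leases4.csv 20`, with the path adjusted to the local Kea configuration.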

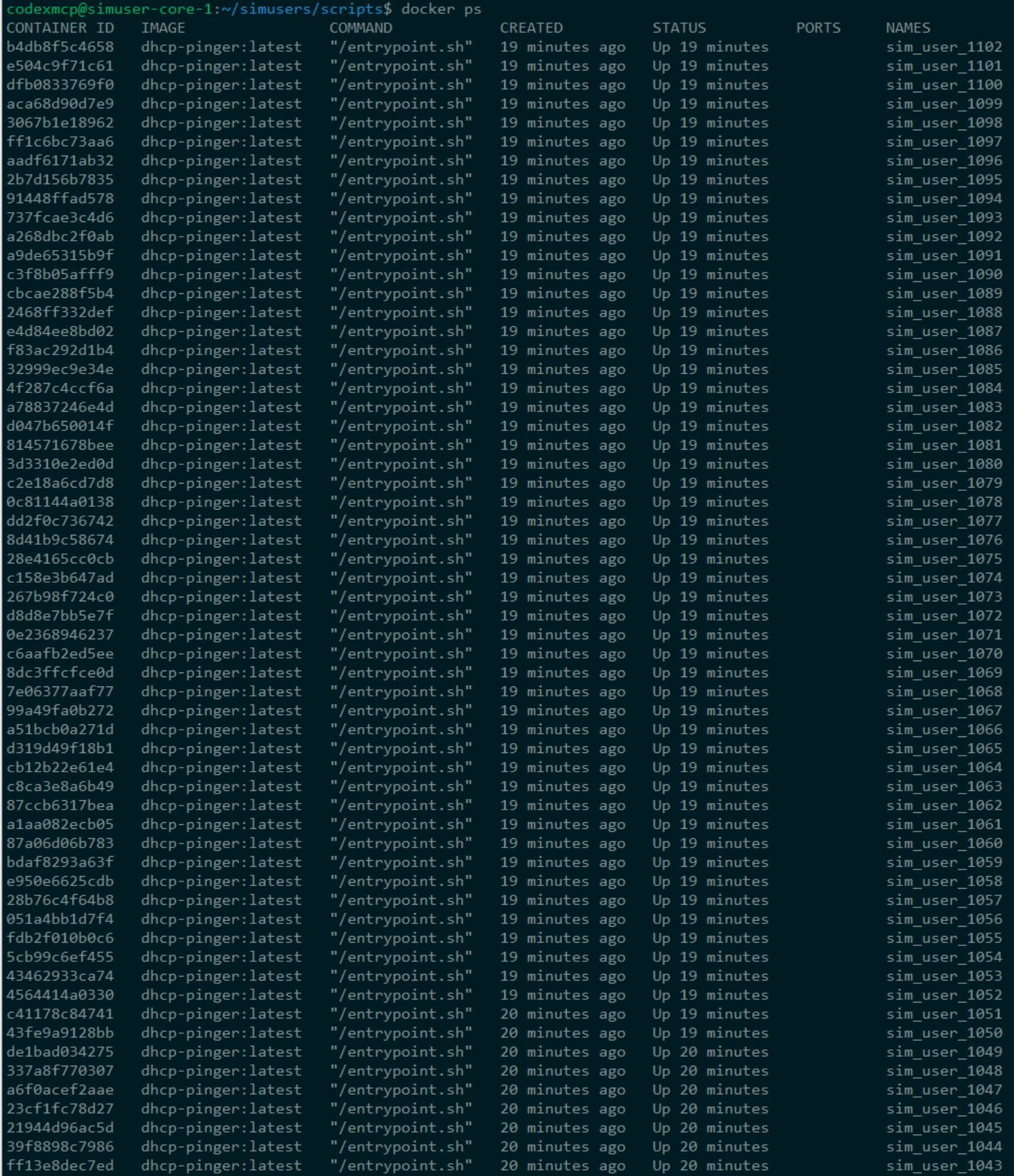

The Result by 6:15 AM

- 1,100 MAC-authenticated virtual users

- Full database-controlled DHCP behavior

- Real-time Grafana dashboards lit up with lease and churn stats

- CodexMCP drove every part of the pipeline—from relational logic to live network state

This wasn’t “dev environment” theater. This was full-stack provisioning, simulated end-to-end, observable, and policy-compliant.

Why This Matters

This proves CodexMCP isn’t just a dashboard—it’s an operational core. It can:

- Replace outdated provisioning systems

- Enforce network access based on service-level DB state

- Simulate real subscriber behavior for development, testing, or forensic reconstruction

- Deliver full-stack visibility from MAC auth to service entitlement

This is how you build a synthetic broadband system with real services, real data, and functional observability. There are no vendors, no guesswork, just logic and control.

Screenshots

CodexMCP UI – Simulated Users Table

Grafana – Kea Lease Load Graph

Docker Containers Running - Sample

Coming Next

Now that CodexMCP governs DHCP at scale, I’ll extend the simulation stack to:

- Add SIP, IPTV, and DNS traffic

- Trigger network failures and outage scenarios

- Observe user complaints via simulated LLM-based chat agents (GoatSim)

The point isn’t just simulation. It’s control, visibility, and repeatability. And it all runs in my lab, on my schedule, before most dev teams finish their first coffee.

-This Is The Fun Stuff

--Bryan