Parsing Kea: Building Real-Time Visibility with Grok and Simulated Users

Kea is an open-source DHCP server developed by ISC. It offers a modular, high-performance alternative to legacy dhcpd, with support for dynamic lease allocation, flexible logging, and integration with control APIs. This post focuses specifically on Kea DHCPv4, used in conjunction with a synthetic simulation environment to model real-world churn and lease behavior under load.

As part of the larger CodexMCP observability stack, Kea is our edge-facing lease authority. That means when devices boot, reconnect, or churn out, Kea is the first responder. Understanding its behavior—especially under pressure—is critical before layering in more services like DNS, NAT, and eventually SIP registration through GoatMUX.

The Log Parsing Foundation

I built a Logstash Grok filter set covering 34 distinct Kea log line formats. These include:

- Operational events like `DHCP4_LEASE_ALLOC`, `DHCP4_LEASE_ADVERT`, and `DHCPSRV_MEMFILE_LFC_START`

- Error conditions like `DHCP4_CONFIG_LOAD_FAIL` and `DHCP4_INIT_FAIL`

- Debug-level traces that reveal internal lease-state churn, MAC handling, and edge-case rejections

In standard INFO logging mode, only three event types typically show up:

- `DHCPSRV_MEMFILE_LFC_START` → periodic lease file cleanup (LFC) trigger

- `DHCP4_LEASE_ADVERT` → OFFER sent to a client

- `DHCP4_LEASE_ALLOC` → successful lease handed out

However, for a period during this experiment I enabled DEBUG logging to map additional structural patterns. I won’t claim I caught every log line Kea can emit, but I’ve captured the bulk of what matters in normal and high-churn environments.
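Grok patterns compile down to regular expressions with named captures, so a Python approximation helps show what each filter does. The sketch below loosely mirrors a pattern along the lines of `%{TIMESTAMP_ISO8601:ts} %{LOGLEVEL:level}\s+\[%{DATA:logger}/%{INT:pid}\.%{DATA:thread}\] %{WORD:msgid} %{GREEDYDATA:msg}`; it is not the filter set described here. It assumes Kea's default file-output layout (timestamp, level, `[logger/pid.thread]`, message ID, text). Older releases and custom output patterns differ, and the sample line is illustrative, not captured from this lab.

```python
import re

# Python stand-in for a Grok pattern over Kea's (assumed) default layout:
# "<date> <time>.<ms> <LEVEL> [<logger>/<pid>.<thread>] <MSGID> <text>"
KEA_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+)\s+"
    r"(?P<level>[A-Z]+)\s+"
    r"\[(?P<logger>[^/\]]+)/(?P<pid>\d+)\.(?P<thread>[^\]]+)\]\s+"
    r"(?P<msgid>[A-Z0-9_]+)\s+"
    r"(?P<msg>.*)$"
)

# Client MAC and leased address inside LEASE_ADVERT / LEASE_ALLOC text,
# e.g. "[hwtype=1 aa:bb:cc:dd:ee:01], ...: lease 192.0.2.10 ..."
LEASE_DETAIL = re.compile(
    r"\[hwtype=\d+ (?P<mac>[0-9a-fA-F]{2}(?::[0-9a-fA-F]{2}){5})\]"
    r".*?: lease (?P<ip>\d{1,3}(?:\.\d{1,3}){3})"
)

LEASE_EVENTS = {"DHCP4_LEASE_ADVERT", "DHCP4_LEASE_ALLOC"}


def parse_kea_line(line):
    """Return a dict of named fields, or None if the line doesn't match."""
    m = KEA_LINE.match(line.rstrip("\n"))
    if m is None:
        return None
    event = m.groupdict()
    if event["msgid"] in LEASE_EVENTS:
        detail = LEASE_DETAIL.search(event["msg"])
        if detail:
            event.update(detail.groupdict())
    return event


# Illustrative line in the assumed layout (not captured from this lab).
sample = (
    "2024-05-01 05:56:12.345 INFO  [kea-dhcp4.leases/1234.140234567890] "
    "DHCP4_LEASE_ALLOC [hwtype=1 aa:bb:cc:dd:ee:01], cid=[no info], "
    "tid=0x1a2b3c4d: lease 192.0.2.10 has been allocated for 4000 seconds"
)
```

Calling `parse_kea_line(sample)` yields `msgid` `DHCP4_LEASE_ALLOC`, `mac` `aa:bb:cc:dd:ee:01`, and `ip` `192.0.2.10`. Lines that don't fit the layout return `None` rather than partial fields.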

These Grok filters are not the final destination—they’re a prototyping scaffold. The actual production engine, TSPG (Time-Sliced Parallel Grep), will rewrite these as fast split-based extractors, bypassing regex entirely.
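TSPG's internals aren't described here, so the following is only a hypothetical illustration of the split-based idea. Under the same assumed default Kea layout, fixed-position fields fall out of `str.split` and `str.partition` without any regex. The function names `split_kea_line` and `lease_fields` are invented for this sketch.

```python
def split_kea_line(line):
    """Split-based field extraction for the assumed default Kea layout.

    Returns None when the line doesn't have the expected shape.
    """
    # split() with no separator collapses runs of whitespace (the assumed
    # layout pads the level, e.g. "INFO  "); maxsplit=5 keeps the message whole.
    parts = line.rstrip("\n").split(None, 5)
    if len(parts) < 5 or not (parts[3].startswith("[") and parts[3].endswith("]")):
        return None
    date, time, level, origin, msgid = parts[:5]
    # "[kea-dhcp4.leases/1234.1402...]" -> logger, then "pid.thread"
    logger, _, proc = origin[1:-1].rpartition("/")
    pid, _, thread = proc.partition(".")
    return {
        "ts": f"{date} {time}", "level": level, "logger": logger,
        "pid": pid, "thread": thread, "msgid": msgid,
        "msg": parts[5] if len(parts) == 6 else "",
    }


def lease_fields(msg):
    """Return (mac, ip) from LEASE_ADVERT/LEASE_ALLOC text, None where absent."""
    mac = ip = None
    end = msg.find("]")
    if msg.startswith("[hwtype=") and end != -1:
        head = msg[1:end].split(" ", 1)   # ["hwtype=1", "aa:bb:cc:dd:ee:01"]
        if len(head) == 2:
            mac = head[1]
    _, sep, tail = msg.partition(": lease ")
    if sep:
        ip = tail.split(" ", 1)[0]        # first token after ": lease "
    return mac, ip


# Illustrative line in the assumed layout (not captured from this lab).
sample = (
    "2024-05-01 05:56:12.345 INFO  [kea-dhcp4.leases/1234.140234567890] "
    "DHCP4_LEASE_ALLOC [hwtype=1 aa:bb:cc:dd:ee:01], cid=[no info], "
    "tid=0x1a2b3c4d: lease 192.0.2.10 has been allocated for 4000 seconds"
)
```

The trade-off is that this approach is fast and free of regex backtracking, but it is tied to a single layout. Any change to the output pattern silently shifts fields, so the `[...]` token check above exists to catch the most obvious mismatch.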

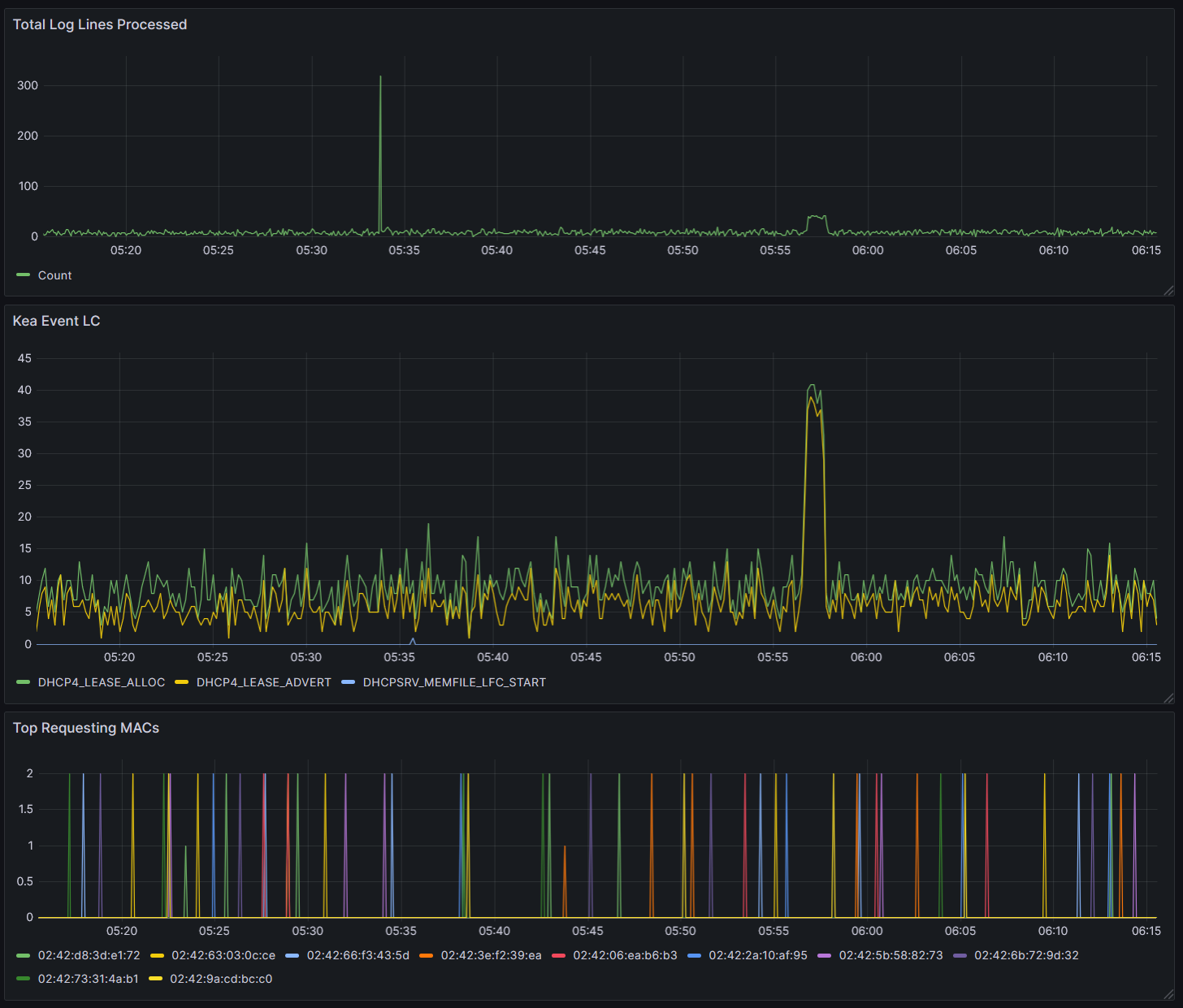

Simulating Real Churn with Docker Clients

To pressure-test the Grok patterns and Kea’s behavior under load, I spun up 2,011 Docker containers, each emulating a DHCP client. This produced realistic lease activity over time, with clean spikes, renewals, and log bursts. Then I added 100 more Docker clients at once to provoke a visible lease allocation spike.
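The launch script itself isn't shown. One way it could be scripted is sketched below, assuming a hypothetical image `dhcp-sim-client:latest` whose entrypoint runs a DHCP client, and a hypothetical Docker network `kea-lab` attached to the segment Kea serves (a macvlan network is one common way to do that). The `--cap-add NET_ADMIN` flag is included because DHCP clients typically need to reconfigure the container's interface. By default the script only prints the commands.

```python
import subprocess

# Hypothetical names, not from the original setup: the image is assumed to run
# a DHCP client as its entrypoint, and the network is assumed to reach Kea.
IMAGE = "dhcp-sim-client:latest"
NETWORK = "kea-lab"


def client_commands(start, count, image=IMAGE, network=NETWORK):
    """Build one `docker run` argument list per simulated client."""
    return [
        [
            "docker", "run", "-d",
            "--name", f"dhcp-client-{i:05d}",  # unique, sortable container names
            "--network", network,
            "--cap-add", "NET_ADMIN",          # lets the client configure its interface
            image,
        ]
        for i in range(start, start + count)
    ]


def launch(commands, dry_run=True):
    """Print the commands, or execute them one by one when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


baseline = client_commands(0, 2011)   # steady-state population
burst = client_commands(2011, 100)    # the 100-client spike
```

`launch(baseline)` prints the baseline commands, and `launch(burst, dry_run=False)` would start the burst. Because the `docker run` calls execute sequentially here, the "at once" burst is really a tight sequence. Running them concurrently (for example with `concurrent.futures`) would compress it further.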

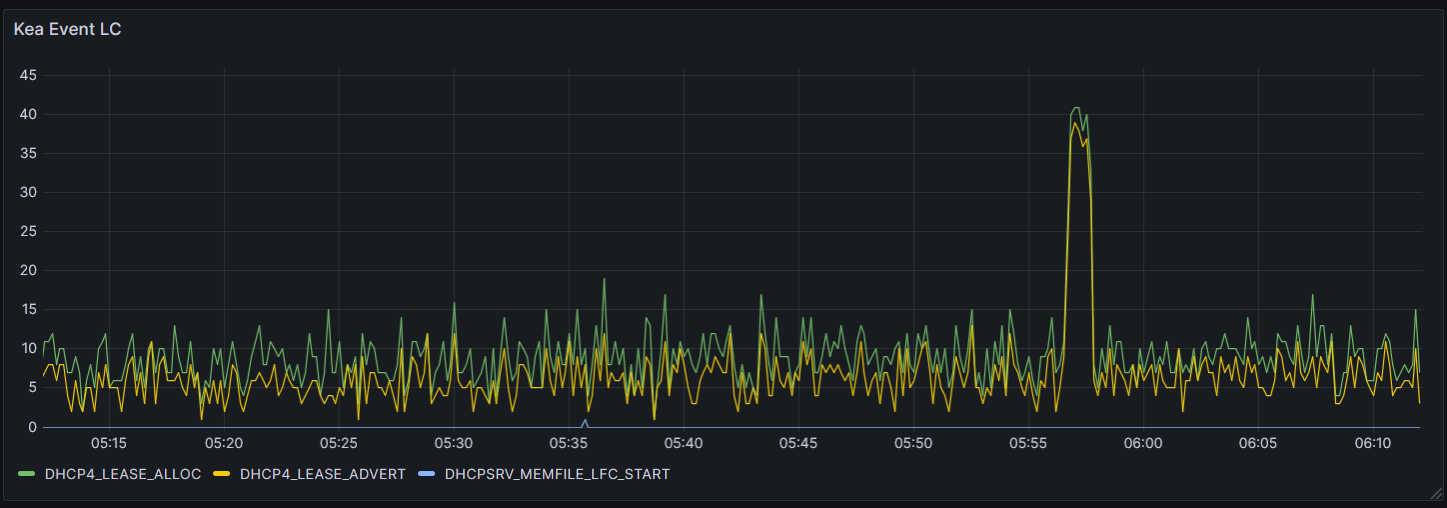

You can see the result in the Grafana panel below. From 05:56 to 05:58, there’s a clear surge in both ADVERT and ALLOC events, peaking in perfect sync:

This wasn’t random noise; it was synthetic but realistic churn, scripted and observable. As leases begin renewing within an hour (DHCP clients renew at T1, which RFC 2131 defaults to half the lease lifetime), a second wave of churn should emerge, showing how Kea handles renewals and lease retention.
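The surge in the Grafana panel amounts to counting each message ID per time bucket. Below is a minimal sketch of that aggregation in plain Python, assuming events are already parsed into `(timestamp, msgid)` pairs and a one-minute bucket. The real panel's query depends on its data source and isn't shown in the post. The input (an arbitrary date, 100 clients each logging one ADVERT and one ALLOC) is made up for illustration.

```python
from collections import Counter
from datetime import datetime

LEASE_EVENTS = ("DHCP4_LEASE_ADVERT", "DHCP4_LEASE_ALLOC")


def per_minute(events, kinds=LEASE_EVENTS):
    """Count (minute, msgid) pairs, like a one-minute Grafana time bucket.

    `events` yields (timestamp, msgid) with timestamps like
    "2024-05-01 05:56:12.345".
    """
    counts = Counter()
    for ts, msgid in events:
        if msgid not in kinds:
            continue
        when = datetime.strptime(ts, "%Y-%m-%d %H:%M:%S.%f")
        counts[(when.replace(second=0, microsecond=0), msgid)] += 1
    return counts


# Made-up input: 100 clients, 60 in the 05:56 minute and 40 in 05:57,
# each producing one ADVERT and one ALLOC at the same second.
events = []
for i in range(100):
    minute, second = 56 + i // 60, i % 60
    ts = f"2024-05-01 05:{minute:02d}:{second:02d}.000"
    events += [(ts, "DHCP4_LEASE_ADVERT"), (ts, "DHCP4_LEASE_ALLOC")]

counts = per_minute(events)
for (bucket, msgid), n in sorted(counts.items()):
    print(bucket.strftime("%H:%M"), msgid, n)
```

Matching ADVERT and ALLOC counts in every bucket is what "peaking in sync" looks like numerically. A bucket where ALLOC falls behind ADVERT would point to offers not being taken up.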

Why This Matters

In real-world networks—especially in rural telco environments where I’ve spent decades—churn isn’t constant. It comes in waves: power flickers, reboot storms, firmware pushes. When thousands of CPE devices all re-DISCOVER within a 90-second window, the DHCP infrastructure must hold.

By logging, parsing, and visualizing every step of Kea’s response, we can:

- Detect when lease offers aren’t being accepted (client-side or network issues)

- Watch for timing skews or processing delays during LFC events

- Tie specific MACs to abuse, overload, or retry loops

- Validate that CodexMCP’s user observability core is stable under load
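The first and third checks above reduce to per-MAC bookkeeping over parsed events. The following minimal sketch assumes events arrive as `(msgid, mac)` pairs. The function `lease_health` and the `retry_threshold` of 3 are hypothetical choices for illustration, not part of the described stack.

```python
from collections import Counter

ADVERT = "DHCP4_LEASE_ADVERT"
ALLOC = "DHCP4_LEASE_ALLOC"


def lease_health(events, retry_threshold=3):
    """Flag MACs that look unhealthy in a batch of (msgid, mac) events.

    unaccepted:  offered a lease (ADVERT) but never allocated one (ALLOC)
    retry_loops: offered a lease at least `retry_threshold` times
    """
    adverts, allocs = Counter(), Counter()
    for msgid, mac in events:
        if msgid == ADVERT:
            adverts[mac] += 1
        elif msgid == ALLOC:
            allocs[mac] += 1
    # Counter returns 0 for missing keys without inserting them.
    unaccepted = sorted(mac for mac in adverts if allocs[mac] == 0)
    retry_loops = sorted(mac for mac, n in adverts.items() if n >= retry_threshold)
    return unaccepted, retry_loops


# Made-up events: one healthy client, one that never accepts its offer,
# and one that keeps getting offers without completing.
events = [
    (ADVERT, "aa:bb:cc:00:00:01"), (ALLOC, "aa:bb:cc:00:00:01"),
    (ADVERT, "aa:bb:cc:00:00:02"),
] + [(ADVERT, "aa:bb:cc:00:00:03")] * 4

unaccepted, retry_loops = lease_health(events)
print("unaccepted:", unaccepted)
print("retry loops:", retry_loops)
```

On a live stream this needs a time window. A client whose ALLOC lands just after the batch boundary would otherwise be misflagged as unaccepted, and a reboot storm legitimately produces repeated DISCOVERs, so the threshold needs tuning against observed baselines.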

What Comes Next

This isn’t the end—it’s a staging ground.

- These logs and metrics will be fed into GoatWatch, the in-house analysis and alert engine

- The parsed structure will be ported to TSPG and restructured into high-speed, split-based log ingestion

- As we bring GoatMUX online, SIP clients will begin registering, adding Layer 7 churn visibility to the stack

- The CodexMCP interface will eventually tie it all together, providing end-to-end visibility from DHCP to voice signaling

Conclusion

Logstash was just the proving ground. The real value lies in the precision: we now understand what Kea says, when it says it, and why. From here, we can scale this out, model realistic ISP behavior, and eventually let synthetic users interact, fail, retry, and recover—all under full watch.

This is the beginning of operational truth, parsed cleanly.

-There is always data to process

--Bryan