Taming the CMS Flood: A Log Enrichment Pipeline Rebuild for Calix ONTs

In the field, ONTs don’t lie—they scream, especially when thousands of them are talking at once.

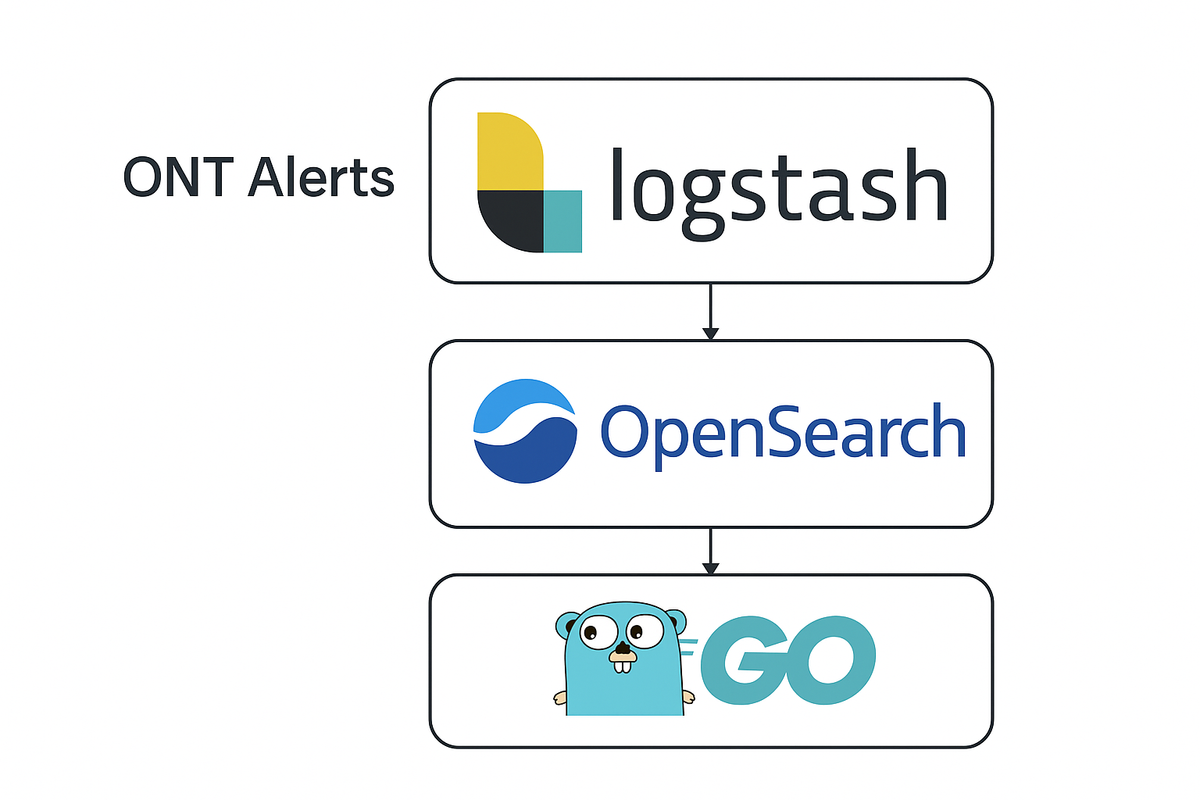

We’ve been running a pipeline where ONT alerts, mostly from Calix E7 cards and their child ONTs, flow into Logstash for processing. This gave us visibility into late collisions, link flaps, threshold crossings, missing ONT, dying ghasp, RF port signal degradation, and well, everything else in near real-time. But enriching those raw syslog messages with metadata like subscriber name, provisioned profile, and optics levels meant tying them to CMS. And that’s where the trouble began.

The Original Pipeline: Useful But Fragile

Here’s how the original pipeline worked:

- Syslog message arrives (UDP port 1514) and gets parsed in Logstash using a multi-pattern Grok filter.

- If the message contains

shelfNameandontID, a Ruby filter shells out to a Perl script that performs SOAP calls to the Calix CMS API. - The script logs in, queries the ONT for status, performance data, and config, and enriches the log message with subscriber details and ONT state.

- Logstash merges that JSON output back into the record and sends it to OpenSearch.

Sounds slick—until 50 ONTs trip alarms in 2 seconds.

The Problem: CMS Under Siege

This setup worked great under low volume. But the second multiple ONTs sent alarms in quick succession, Logstash launched multiple Perl processes simultaneously. Each one opened its own CMS session and triggered three SOAP calls per ONT:

- Operational status

- Performance metrics

- Configuration state

The CMS backend couldn’t handle the spike. It would lock up under the load, and sometimes entire cards stopped responding to CMS altogether. The recovery time was ugly: even after killing Logstash and the Perl zombies, CMS wouldn’t stabilize for 10 to 15 minutes. That’s 15 minutes of blind operations on a system our NOC relies on every hour of the day.

The Pivot: Raw Logging and Decoupled Enrichment

So I pulled the plug on the inline enrichment. The Ruby launcher is now gone from the pipeline. Logstash still collects the raw logs—syslog from the E7s with ONT IDs, timestamps, ports, event types, and GPON details—but they’re no longer enriched inline.

That data is still valuable, but it creates a pain point: matching an ONT alert to a user now means manually cross-referencing shelf + ONT ID against other tools or databases, which is not ideal when you're triaging issues on a live circuit.

Long-term, I’d like to connect this directly to our Elation+ provisioning system. If that backend has an accessible API in its latest version (TBD), it could give us direct access to user IDs and subscriber mappings. But I haven’t had the cycles yet to chase that down.

The New Design: ont-log-enricher in Go

To fix this the right way, I rewrote the enrichment logic in Go. Sure, I could’ve done it in Perl and probably had it working by lunch. But the Perl ecosystem isn’t what it used to be, and with Go, I get a single compiled binary with everything I need—no external modules, no fragile installs.

The new tool, ont-log-enricher, OpenSearch queries periodically for logs that are missing enrichment. It looks for records that:

- Are less than an hour old

- Contain

shelfNameandontID - Are still missing

description(the field that implies they haven’t been enriched)

It de-duplicates polling by combining those fields and only querying CMS once per unique ONT. Then it pushes the full set of ONT details—model, software version, subscriber ID, optics levels, uptime, and more—back into all matching OpenSearch records using bulk updates.

Highlights

- We only scan each ONT once per run, no matter how many times it appears.

- We don’t poll older events—only fresh logs within the last hour.

- It uses three SOAP calls per ONT, just like the original Perl script—but now sequentially and safely, not in parallel bombardments.

- We can gather information from multiple ONTs with only a single SOAP login and logout, which saves a lot of time.

- It can run from cron every minute, or as a lightweight daemon if you need more frequent updates.

Yes, we lose some time resolution on ONT data points, CMS isn’t being polled in real time anymore. But we gain something far more important: stability and confidence that CMS won’t get jammed by its own event flood.

Outcome

- No more CMS lockups

- No more pileups of zombie Perl processes

- Syslog pipeline is stable

- Enrichment happens asynchronously and safely

- The new Go tool can be extended or API-wired later as time permits

And best of all, the NOC can still see what matters—who the ONT belongs to, what profile it’s running, what optical levels look like—without risking service-wide outages from over-polling.

Next Steps

This system will keep evolving. I’ll likely add:

- Error handling and retry queues

- Configurable thresholds for CMS polling rate

- Optional integration with Elation+ if an API is available

- Broader ONT inventory syncing with passive updates

But for now, this is a solid foundation. Raw logs in, smart enrichment out, and no more battles with CMS overload.

These are the kinds of things I think about and pull off daily while still working in a busy ticket queue and handling other NOC duties.

--Something New Every Day

-Bryan